
Fine-tuning large language models (LLMs) has become a pivotal process in natural language processing (NLP), enabling organizations to tailor these powerful AI tools to specific needs and improve performance in various applications. This blog post explores what fine-tuning is, why it matters, what the process involves, and practical examples across different domains.

Understanding Large Language Models

Large language models, such as OpenAI’s GPT series and Google’s BERT, are designed to understand human language and, in the case of generative models like GPT, to produce human-like text. These models are built on the transformer architecture and are trained on vast datasets, allowing them to grasp the nuances of language, context, and semantics.

Key Characteristics of LLMs:

- Pre-training: LLMs undergo extensive pre-training on diverse text data, learning to predict the next word in a sentence based on the preceding context (or, in BERT-style models, words that have been masked out of a sentence). This phase equips them with a broad understanding of language.

- Versatility: LLMs can perform a variety of NLP tasks, including text generation, summarization, translation, and sentiment analysis, making them highly adaptable to different applications.

- Contextual Understanding: They excel at generating coherent and contextually appropriate responses, which is crucial for applications like chatbots and virtual assistants.

Why Fine-Tune LLMs?

Fine-tuning is the process of taking a pre-trained model and further training it on a smaller, domain-specific dataset. This process is vital for several reasons:

- Specialization: While pre-trained LLMs possess general language understanding, fine-tuning enables them to excel in specific tasks or industries. For instance, a model fine-tuned on legal documents will perform better in legal text analysis than a general model.

- Improved Performance: Fine-tuning can substantially improve the model’s accuracy and relevance on its designated task, leading to better outcomes in applications like customer support, content generation, and data analysis.

- Cost-Effectiveness: Training an LLM from scratch is resource-intensive and time-consuming. Fine-tuning allows organizations to leverage existing models, saving both time and computational resources.

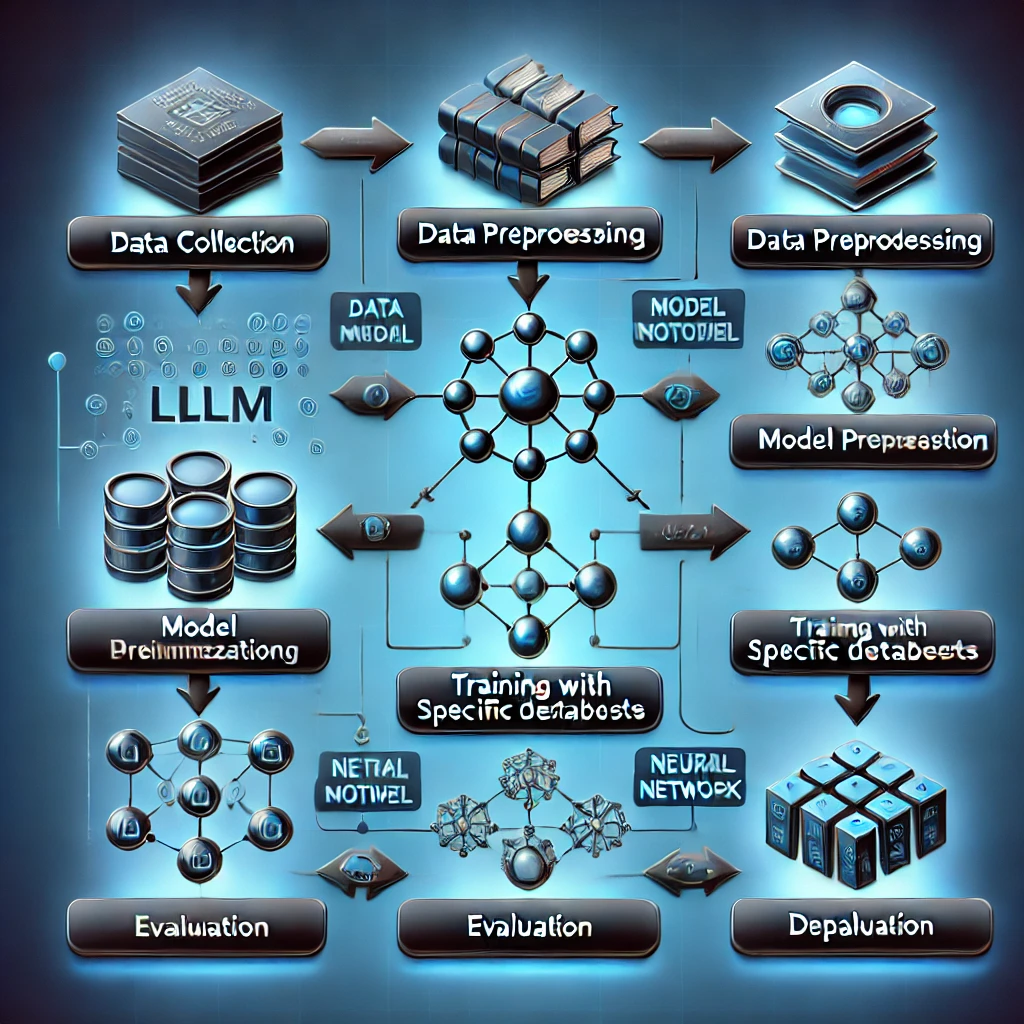

The Fine-Tuning Process

Fine-tuning involves several key steps, which can vary depending on the specific requirements of the task and the architecture of the LLM. Below is a structured approach to fine-tuning LLMs:

1. Define Objectives and Scope

Before fine-tuning, it’s essential to clearly define the objectives. Determine whether the model will serve a general purpose or target a specific task, such as sentiment analysis or named entity recognition. This clarity helps in selecting the right model and dataset.

2. Model Selection

Choose an appropriate pre-trained model based on the task requirements. Popular options include:

- GPT-3 or GPT-4: Suitable for conversational agents and creative writing.

- BERT or RoBERTa: Ideal for tasks requiring understanding of context, such as question answering and sentiment analysis.

3. Data Preparation

Gather a dataset that is representative of the specific task. This dataset is typically much smaller than the one used for pre-training, but it should contain relevant examples. Data preparation may involve:

- Cleaning: Removing noise and irrelevant information from the dataset.

- Labeling: For supervised tasks, ensure that the data is properly labeled according to the task requirements.
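The cleaning and labeling steps above can be sketched in plain Python. This is a minimal illustration, not a complete pipeline: the `text` and `label` field names and the `clean_examples` helper are assumptions made for the example, and real datasets usually need task-specific rules on top.

```python
import re


def clean_examples(records):
    """Normalize whitespace, drop empty or unlabeled rows, and remove duplicates.

    `records` is assumed to be a list of dicts with hypothetical "text" and
    "label" keys; adapt the key names to your own dataset.
    """
    seen = set()
    cleaned = []
    for record in records:
        # Collapse runs of whitespace and trim the ends.
        text = re.sub(r"\s+", " ", record.get("text") or "").strip()
        label = record.get("label")
        if not text or label is None:
            continue  # skip rows with no usable text or no label
        key = (text.lower(), label)
        if key in seen:
            continue  # skip case-insensitive duplicates with the same label
        seen.add(key)
        cleaned.append({"text": text, "label": label})
    return cleaned


raw = [
    {"text": "  Great   product!  ", "label": 1},
    {"text": "great product!", "label": 1},
    {"text": "", "label": 0},
    {"text": "Arrived broken", "label": None},
    {"text": "Arrived broken", "label": 0},
]
cleaned = clean_examples(raw)
```

With the sample rows, only the first and last records survive: the second is a duplicate of the first, the third has no text, and the fourth has no label.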

4. Fine-Tuning the Model

Utilize frameworks like Hugging Face’s Transformers or TensorFlow to fine-tune the model. The process generally involves:

- Loading the Pre-trained Model: Start with a pre-trained model.

- Training: Adjust the model’s parameters using the prepared dataset. This typically involves using a smaller learning rate to avoid overwriting the knowledge gained during pre-training.
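To make the idea of a small, carefully controlled learning rate concrete, here is a sketch of one commonly used schedule: a linear warmup followed by linear decay. The function name, the peak rate of 2e-5, and the step counts are illustrative assumptions, not recommendations from any particular library.

```python
def linear_warmup_decay(step, total_steps, warmup_steps, peak_lr):
    """Return the learning rate for a given optimizer step.

    The rate ramps linearly from 0 to `peak_lr` during warmup, then decays
    linearly back to 0 at `total_steps`.
    """
    if warmup_steps > 0 and step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / max(total_steps - warmup_steps, 1)


# Illustrative values (assumptions): 5,000 training steps, 500 warmup steps.
schedule = [
    linear_warmup_decay(step, total_steps=5000, warmup_steps=500, peak_lr=2e-5)
    for step in (0, 250, 500, 2750, 5000)
]
```

The warmup phase keeps early updates small while the optimizer statistics settle, which is one way to reduce the risk of overwriting what the model learned during pre-training.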

Example code snippet for fine-tuning a model using Hugging Face:

```python
from transformers import (
    AutoModelForSequenceClassification,
    AutoTokenizer,
    Trainer,
    TrainingArguments,
)

# Load pre-trained model and tokenizer
model_name = "distilbert-base-uncased"
model = AutoModelForSequenceClassification.from_pretrained(model_name, num_labels=2)
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Prepare dataset
train_dataset = ...  # Load your training dataset
eval_dataset = ...  # Load your evaluation dataset

# Set training arguments
training_args = TrainingArguments(
    output_dir='./results',
    num_train_epochs=3,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=16,
    warmup_steps=500,
    weight_decay=0.01,
    logging_dir='./logs',
)

# Create Trainer instance
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
)

# Fine-tune the model
trainer.train()
```

5. Evaluation and Iteration

After fine-tuning, evaluate the model’s performance using relevant metrics, such as accuracy, F1 score, or BLEU score, depending on the task. Based on the evaluation results, you may need to iterate on the fine-tuning process by adjusting hyperparameters, modifying the dataset, or even changing the model architecture.
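As a rough illustration of the classification metrics mentioned above, the following sketch computes accuracy, precision, recall, and F1 for a binary task in plain Python. The helper name and the sample labels are made up for the example; in practice a metrics library would normally be used instead.

```python
def binary_classification_metrics(y_true, y_pred, positive_label=1):
    """Compute accuracy, precision, recall, and F1 for binary predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive_label and p == positive_label)
    fp = sum(1 for t, p in pairs if t != positive_label and p == positive_label)
    fn = sum(1 for t, p in pairs if t == positive_label and p != positive_label)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical gold labels and model predictions for eight examples.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_classification_metrics(y_true, y_pred)
```

Accuracy alone can be misleading when classes are imbalanced, which is why F1 (the harmonic mean of precision and recall) is often reported alongside it.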

6. Deployment

Once the model meets the desired performance criteria, it can be deployed for use in applications. Consider optimizing the model for computational efficiency and user experience during deployment.

Practical Examples of Fine-Tuning LLMs

Fine-tuning LLMs can be applied across various industries and use cases. Here are some practical examples:

1. Customer Support Chatbots

Companies can fine-tune LLMs to create customer support chatbots that understand specific products and services. By training the model on historical customer interactions, the chatbot can provide accurate responses to customer inquiries, improving customer satisfaction and reducing response times.

2. Legal Document Analysis

Law firms can fine-tune LLMs on legal texts, case law databases, and contracts. This specialization allows the model to identify relevant clauses, analyze legal documents, and even assist in drafting contracts, significantly enhancing the efficiency of legal professionals.

3. Healthcare Text Analysis

In the healthcare industry, fine-tuning can be used to develop models that analyze patient records, medical literature, and clinical notes. By training on domain-specific datasets, these models can assist healthcare providers in diagnosing conditions, recommending treatments, and summarizing patient histories.

4. Content Generation for Marketing

Marketing teams can fine-tune LLMs to generate tailored content for specific audiences. By training on previous marketing materials and customer feedback, the model can create engaging blog posts, social media updates, and email campaigns that resonate with target demographics.

5. Sentiment Analysis for Brands

Brands can leverage fine-tuned LLMs to analyze customer sentiment from social media, reviews, and surveys. By training the model on labeled datasets containing positive, negative, and neutral sentiments, businesses can gain insights into customer perceptions and adjust their strategies accordingly.
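For a three-class sentiment task like this, string labels generally have to be mapped to integer ids before training. The sketch below shows one way to do that and to check the class balance; the `sentiment` field name, the id assignment, and the sample reviews are assumptions for illustration only.

```python
from collections import Counter

# Hypothetical label scheme; any consistent assignment of ids would work.
LABEL2ID = {"negative": 0, "neutral": 1, "positive": 2}
ID2LABEL = {idx: name for name, idx in LABEL2ID.items()}


def encode_sentiment(rows):
    """Return copies of `rows` with an integer "label" field added.

    Raises KeyError if a row carries a sentiment outside LABEL2ID, so that
    labeling mistakes surface early instead of silently corrupting training.
    """
    return [{**row, "label": LABEL2ID[row["sentiment"]]} for row in rows]


reviews = [
    {"text": "Love the new update", "sentiment": "positive"},
    {"text": "App keeps crashing", "sentiment": "negative"},
    {"text": "It works as described", "sentiment": "neutral"},
    {"text": "Best purchase this year", "sentiment": "positive"},
]
encoded = encode_sentiment(reviews)
class_counts = Counter(row["sentiment"] for row in reviews)
```

With three classes, a classification head would need three outputs rather than the `num_labels=2` used in the earlier snippet, and a quick look at `class_counts` helps spot a heavily skewed dataset before training.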

Challenges and Considerations

While fine-tuning LLMs offers substantial benefits, there are challenges and considerations to keep in mind:

- Data Quality: The quality of the dataset used for fine-tuning is critical. Poor-quality data can lead to suboptimal model performance.

- Overfitting: Fine-tuning on a small dataset can result in overfitting, where the model performs well on training data but poorly on unseen data. Regularization techniques and careful monitoring can help mitigate this risk.

- Computational Resources: Fine-tuning requires significant computational resources, especially for large models. Organizations must ensure they have the necessary infrastructure in place.

- Ethical Considerations: Fine-tuning models on biased or unrepresentative datasets can perpetuate biases in AI systems. It’s essential to consider ethical implications and strive for fairness in AI applications.
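One form of the careful monitoring mentioned under overfitting is early stopping: halt training once the evaluation loss stops improving. The sketch below is a simplified, framework-independent illustration; the function name, the `patience` and `min_delta` parameters, and the loss values are assumptions for the example.

```python
def early_stopping_epoch(eval_losses, patience=2, min_delta=0.0):
    """Simulate early stopping over a sequence of per-epoch evaluation losses.

    Returns (stop_epoch, best_epoch). `stop_epoch` is the index of the epoch
    at which training would halt, or None if the rule never triggers.
    """
    best_loss = float("inf")
    best_epoch = None
    epochs_without_improvement = 0
    for epoch, loss in enumerate(eval_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the patience counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return None, best_epoch


# Hypothetical evaluation losses: improving at first, then rising as the
# model starts to overfit the training data.
losses = [0.62, 0.48, 0.41, 0.43, 0.47, 0.55]
stop_epoch, best_epoch = early_stopping_epoch(losses, patience=2)
```

In this example the evaluation loss bottoms out at epoch index 2, and training would stop two epochs later, at which point the checkpoint from the best epoch would typically be the one to keep.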

Conclusion

Fine-tuning large language models is an essential process that enhances their applicability and performance across various domains. By adapting pre-trained models to specific tasks, organizations can unlock the full potential of LLMs, leading to improved outcomes in customer service, legal analysis, healthcare, marketing, and more. As the field of NLP continues to evolve, mastering fine-tuning will be crucial for using these powerful AI tools effectively.

In an era where AI is becoming increasingly integrated into business processes, understanding and implementing fine-tuning strategies will provide a competitive edge, enabling organizations to deliver tailored solutions that meet the unique needs of their users.
