Artificial Intelligence (AI) models, particularly machine learning (ML) and deep learning models, have become pivotal in various industries. When we talk about models, especially in the context of open-source AI, one of the most important concepts to grasp is “model weights.” Model weights are the numerical values assigned to the connections between neurons in a neural network. They are at the core of any AI model, dictating how the model processes input data and makes decisions.

In this article, we will explore what model weights are, their significance in AI, and why they are particularly crucial in the open-source ecosystem.

What Are Model Weights?

In simple terms, model weights are parameters that a neural network learns during the training phase, alongside bias terms. Training repeatedly adjusts these weights to minimize the error between the predicted and actual outcomes. In a feedforward neural network, for example, each weight determines how much influence a specific input has on the output.
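To make the idea of "adjusting weights to minimize error" concrete, here is a minimal sketch of gradient descent on a model with a single weight. The toy data, the learning rate, and the function names are assumptions chosen for illustration, not part of any particular library.

```python
# Toy data generated by the rule y = 2 * x, so the ideal weight is 2.0.
inputs = [1.0, 2.0, 3.0, 4.0]
targets = [2.0, 4.0, 6.0, 8.0]


def mean_squared_error(weight):
    """Average squared gap between predictions (weight * x) and targets."""
    return sum((weight * x - y) ** 2 for x, y in zip(inputs, targets)) / len(inputs)


def train(weight=0.0, learning_rate=0.01, steps=200):
    """Repeatedly nudge the weight in the direction that lowers the error."""
    for _ in range(steps):
        # Gradient of the mean squared error with respect to the weight.
        gradient = sum(
            2 * (weight * x - y) * x for x, y in zip(inputs, targets)
        ) / len(inputs)
        weight -= learning_rate * gradient
    return weight


learned_weight = train()
print("error before training:", mean_squared_error(0.0))
print("error after training: ", mean_squared_error(learned_weight))
print("learned weight:       ", round(learned_weight, 3))
```

The weight starts at 0.0 and moves toward 2.0 as the error shrinks. Real networks apply the same idea to millions of weights at once.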

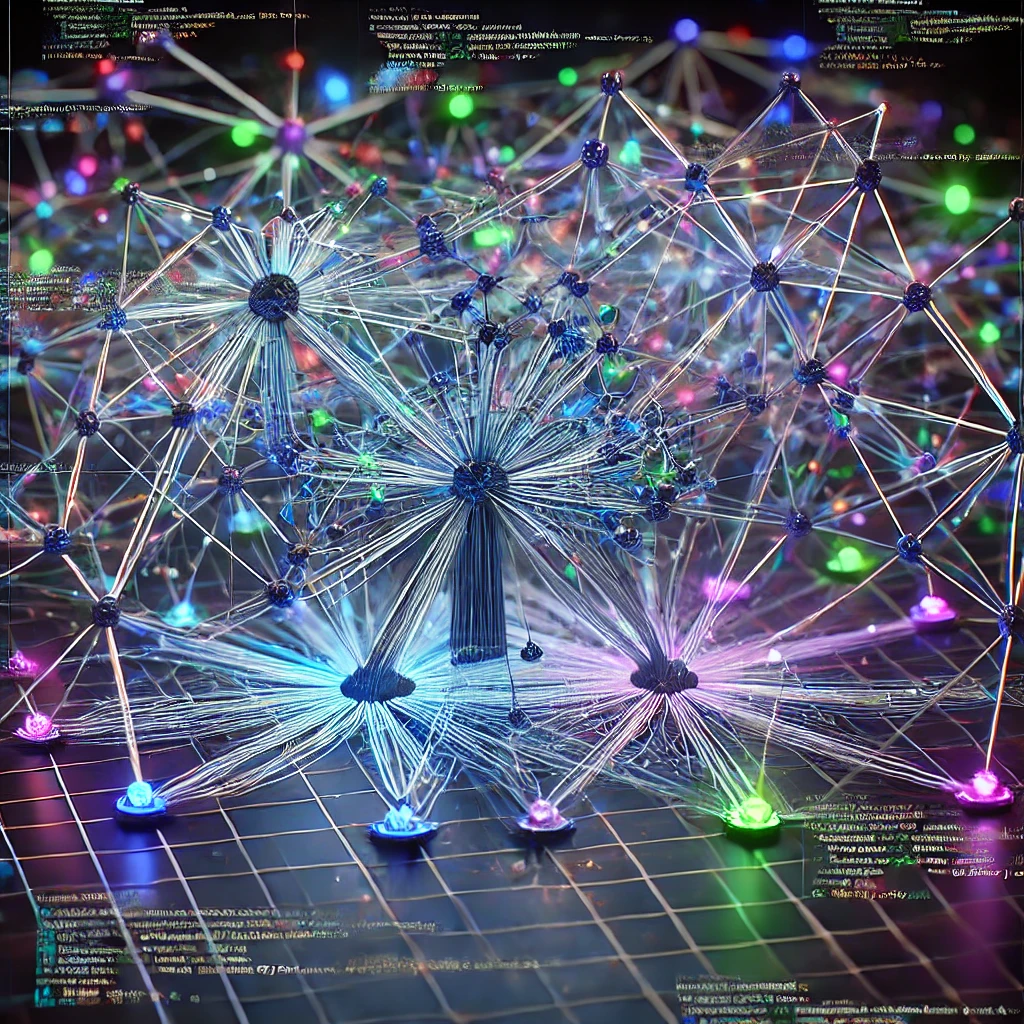

Imagine a neural network as a web of connections. Each connection between neurons (loosely analogous to a synapse) has a weight. A weight with a large magnitude means the input has a strong influence on the output: a large positive weight pushes the output up, while a large negative weight pushes it down. A weight close to zero means the input has little or no impact.
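The effect of a single weight can be sketched with one artificial neuron. The function name `neuron_output`, the sigmoid activation, and the specific weight values are illustrative assumptions.

```python
import math


def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the inputs, squashed into (0, 1) by a sigmoid."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-weighted_sum))


features = [1.0, 1.0, 1.0]

# Only the weight on the first input changes between these calls.
baseline = neuron_output(features, [0.0, 0.0, 0.0])
strong_positive = neuron_output(features, [3.0, 0.0, 0.0])
near_zero = neuron_output(features, [0.01, 0.0, 0.0])
strong_negative = neuron_output(features, [-3.0, 0.0, 0.0])

print("baseline:       ", round(baseline, 3))
print("strong positive:", round(strong_positive, 3))
print("near zero:      ", round(near_zero, 3))
print("strong negative:", round(strong_negative, 3))
```

A weight near zero leaves the output close to the baseline, while weights of large magnitude move it decisively in one direction or the other.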

Example: Weights in a Neural Network

- Input: An image of a cat

- Model: A neural network designed to classify images

- Weights: Values that help the network decide if the image is a cat or something else

As the neural network trains on thousands or millions of labeled images, it gradually adjusts the weights to improve classification accuracy.

Why Are Model Weights Important?

1. Defining the Model’s Behavior

The entire decision-making ability of an AI model relies on its weights. They dictate how the input data flows through the network and ultimately how predictions are made. Changing the weights changes the model’s behavior.
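As a small illustration of how weights alone define behavior, the sketch below runs the same one-neuron architecture with two different weight sets. The weight values and the names `AND_WEIGHTS` and `OR_WEIGHTS` are hand-picked assumptions for this example rather than learned values.

```python
def step_neuron(inputs, weights, bias):
    """Return 1 if the weighted sum plus bias is positive, otherwise 0."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


# Same architecture and same bias; only the weights differ.
BIAS = -0.7
AND_WEIGHTS = [0.5, 0.5]
OR_WEIGHTS = [1.0, 1.0]

cases = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_outputs = [step_neuron(case, AND_WEIGHTS, BIAS) for case in cases]
or_outputs = [step_neuron(case, OR_WEIGHTS, BIAS) for case in cases]

print("with AND weights:", and_outputs)
print("with OR weights: ", or_outputs)
```

With the first weight set the neuron behaves like a logical AND; with the second it behaves like a logical OR, even though the code that runs it is identical.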

2. Transfer Learning

In transfer learning, you can use a pre-trained model as a starting point and fine-tune it for a new task. This is possible because the pre-trained model already has learned useful weights. These weights can be adapted, saving time and computational resources.
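A rough sketch of this idea follows: a small feature layer stands in for the pre-trained model and stays frozen, while only a new output layer (the "head") is trained for the new task. The weight values, the toy examples, and the names `PRETRAINED_FEATURE_WEIGHTS`, `extract_features`, and `fine_tune_head` are all hypothetical; a real project would load the weights from a published checkpoint.

```python
# Hypothetical "pre-trained" weights for a two-unit feature layer.
PRETRAINED_FEATURE_WEIGHTS = [[1.0, -1.0], [0.5, 0.5]]


def extract_features(x):
    """Frozen layer: weighted sums followed by ReLU. Never updated below."""
    return [
        max(0.0, sum(w * xi for w, xi in zip(row, x)))
        for row in PRETRAINED_FEATURE_WEIGHTS
    ]


def fine_tune_head(examples, learning_rate=0.05, epochs=500):
    """Train only the new head weights with stochastic gradient descent."""
    head = [0.0, 0.0]
    for _ in range(epochs):
        for x, target in examples:
            features = extract_features(x)
            prediction = sum(h * f for h, f in zip(head, features))
            error = prediction - target
            # Gradient of the squared error with respect to each head weight.
            head = [h - learning_rate * 2 * error * f for h, f in zip(head, features)]
    return head


# Toy data for the new task: (input, target) pairs.
new_task_examples = [
    ([2.0, 1.0], 0.5),
    ([1.0, 1.0], -1.0),
    ([3.0, 1.0], 2.0),
    ([0.0, 2.0], -1.0),
]

head_weights = fine_tune_head(new_task_examples)
print("learned head weights:", [round(h, 3) for h in head_weights])
```

Only two head weights are trained here, which is why adapting an existing model tends to be much cheaper than training every weight from scratch.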

3. Optimization and Performance

The quality of an AI model’s weights directly affects its performance. Well-optimized weights lead to accurate predictions on new data. Poorly optimized weights, on the other hand, can leave the model underfit (inaccurate even on its training data), overfit (accurate on training data but unreliable on new inputs), or failing outright.

4. Explainability

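Understanding weights can help make AI models more explainable. In simple models such as linear or logistic regression, examining the learned weights shows how much importance the model places on each feature. In deep networks, individual weights are much harder to interpret, so weight inspection is usually combined with other explainability techniques.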
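For a simple linear model, reading importance off the weights can be sketched as below. The feature names and weight values are invented for illustration, and the ranking is only meaningful under the assumption that all features are on comparable scales.

```python
# Hypothetical learned weights of a linear "is this a cat?" scorer.
learned_weights = {
    "pointed_ears": 1.4,
    "whiskers": 0.9,
    "background_color": 0.02,
    "floppy_ears": -1.1,
}


def rank_features_by_influence(weights):
    """Sort feature names by the absolute size of their weight, largest first."""
    return sorted(weights, key=lambda name: abs(weights[name]), reverse=True)


ranking = rank_features_by_influence(learned_weights)
for name in ranking:
    direction = "raises" if learned_weights[name] > 0 else "lowers"
    print(f"{name}: weight {learned_weights[name]:+.2f} ({direction} the cat score)")
```

The sign of each weight shows the direction of its influence and the magnitude shows its strength. This direct reading does not carry over to the individual weights of a deep network.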

The Role of Weights in Open-Source AI

In the open-source AI community, weights are the building blocks of knowledge sharing. When an AI model is shared as open source, it usually includes the model architecture and, optionally, the pre-trained weights. These weights provide immense value to developers and researchers who can leverage them to build upon existing work.

1. Reproducibility

Open-source models allow others to replicate research and experiments. When authors publish the trained model weights, users can reproduce results immediately without having to retrain the model from scratch. This is especially useful for complex models that require significant computational power to train.
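The reproducibility benefit can be sketched as a save-and-load round trip: if the weights are stored and restored exactly, the restored model produces the same prediction as the original. JSON, the file name `weights.json`, and the tiny linear model are assumptions made to keep the example self-contained; real projects typically use a dedicated binary checkpoint format.

```python
import json
import os
import tempfile


def predict(weights, inputs):
    """Linear model: weighted sum of the inputs plus a bias."""
    return sum(w * x for w, x in zip(weights["coefficients"], inputs)) + weights["bias"]


# Stand-in for weights produced by an expensive training run.
trained_weights = {"coefficients": [0.25, -1.5, 3.0], "bias": 0.5}
sample_input = [1.0, 2.0, 3.0]

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "weights.json")
    # The author publishes the weights ...
    with open(path, "w") as handle:
        json.dump(trained_weights, handle)
    # ... and another user loads them without retraining.
    with open(path) as handle:
        restored_weights = json.load(handle)

original_prediction = predict(trained_weights, sample_input)
restored_prediction = predict(restored_weights, sample_input)
print(original_prediction, restored_prediction)
```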

2. Collaboration and Innovation

Sharing weights accelerates innovation. Developers can fine-tune or tweak models for their specific use cases, improving them for unique applications without reinventing the wheel. In the AI research community, sharing weights promotes collaboration and faster iteration cycles, helping solve complex problems more efficiently.

3. Reduced Barriers to Entry

For those who lack the computational resources to train large models, access to pre-trained model weights is invaluable. Open-source weights lower the barrier to entry, allowing a broader community of developers and researchers to contribute to AI advancements.

Challenges with Model Weights in Open Source

While sharing model weights in open-source AI has many advantages, it also comes with challenges.

1. Security and Privacy Concerns

Pre-trained models can unintentionally leak sensitive information from the training data. This is particularly concerning when models are trained on proprietary or personal data. It is crucial for the open-source community to develop protocols to mitigate these risks.

2. Weight Size and Storage

Large AI models, especially those like GPT-3 or BERT, have millions or even billions of weights, making them huge in terms of file size. Sharing these weights requires significant storage and bandwidth, which can be a challenge for open-source distribution.
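A back-of-the-envelope estimate shows why file size becomes an issue: the raw size is roughly the number of weights multiplied by the bytes used to store each one. The parameter counts below are the approximate published figures for BERT-base (about 110 million) and GPT-3 (about 175 billion); the function name and the choice of storage precision are assumptions for illustration.

```python
# Bytes needed to store one weight at common numeric precisions.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "int8": 1}


def weights_size_gb(parameter_count, dtype="float32"):
    """Approximate raw size of the weights in gigabytes (1 GB = 10**9 bytes)."""
    return parameter_count * BYTES_PER_PARAMETER[dtype] / 1e9


bert_base_gb = weights_size_gb(110_000_000, "float32")
gpt3_gb = weights_size_gb(175_000_000_000, "float16")

print(f"BERT-base at float32: about {bert_base_gb:.2f} GB")
print(f"GPT-3 at float16:     about {gpt3_gb:.0f} GB")
```

The estimate ignores file-format overhead and compression, but it shows the scale involved: well under a gigabyte for a BERT-sized model versus hundreds of gigabytes for a GPT-3-sized one.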

Conclusion: The Future of Model Weights in Open-Source AI

Model weights are fundamental to the behavior, performance, and interpretability of AI models. In the open-source AI landscape, they are the key to reproducibility, collaboration, and innovation. Sharing these weights helps democratize access to powerful AI tools, enabling more developers and researchers to contribute to the field. However, as open-source models grow larger and more complex, the community must also address challenges related to privacy, security, and infrastructure.

As AI continues to evolve, the importance of model weights will only grow, especially in open-source projects where collaboration and transparency are crucial. Understanding and effectively utilizing model weights is a skill that can unlock new possibilities in AI development.

I, Evert-Jan Wagenaar, resident of the Philippines, have a warm heart for the country. The same applies to Artificial Intelligence (AI). I have extensive knowledge and the necessary skills to make the combination a great success. I offer myself as an external advisor to the government of the Philippines. Please contact me using the Contact form or email me directly at evert.wagenaar@gmail.com!
